EAGER: Link Free Graph Visualization for Exploring Large Complex Graphs

Team Members:

Jing Yang, Associate professor; Yujie Liu, Yang Chen, and Scott Barlowe, PhD students (Visualization)

Jianping Fan, Associate professor; Yi Shen and Chunlei Yang, PhD students (Online Multimedia Network Construction)

Collaborators:

Xiaoru Yuan, Professor; Xin Zhang, Master's student (Peking University)

Ye Zhao, Assistant professor; Jamal Alsakran, PhD student (Kent State University)

Shixia Liu, Lead researcher (Microsoft Research Asia)

Support:

NSF IIS-0946400; PIs: Jing Yang and Jianping Fan

Overview

Graph visualization is widely used in applications such as social network analysis, financial analysis, bioinformatics, and web information exploration. The dominant graph visualization techniques, Node-Link Diagrams (NLDs), suffer from the following problems: (1) they become cluttered when visualizing large graphs; (2) they have limited applicability to multivariate graphs; and (3) they lack the interactivity needed for complex graph analysis tasks. To address these challenges, this project has explored a new research direction called Link Free Graph Visualization (LFGV). LFGV uses multidimensional visualization and interaction techniques, rather than NLDs, to interactively explore the structural and attribute information carried by a graph. By discarding the links, LFGV reduces clutter and gains more flexibility in interactions. In this project, we have developed several proof-of-concept LFGV prototypes, as well as several novel approaches for automatically constructing weblog networks and other online information networks.

Datasets

1. http://coitweb.uncc.edu/~jyang13/datasets/nyt.gml. The corpus contains 1,078 New York Times world news articles published between February 22, 2011 and April 25, 2011. The network contains 1,200 vertices and 7,042 edges. Each vertex is a tag, and each edge is associated with the IDs of the news articles that contain both of its endpoint tags. The news articles can be accessed through URLs stored in this file. Node attributes indicate the type of each tag and the time when it appeared.

2. The co-author network of 1,462 authors. This network is generated from 4,526 bibliography entries collected by Xiaoru Yuan and his students from the DBLP Computer Science Bibliography. All of these entries were authored or coauthored by at least one of 36 researchers who served on the program committee of the IEEE Conference on Information Visualization. Note that although this dataset covers co-author information from 4,526 papers, links among authors outside these 36 researchers may be missing, because those authors' own publications were not used to generate the dataset. The network also contains keyword information for the papers written by each author (as node attributes). However, this information is obviously incomplete, and we suggest discarding it.
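To make the structure of the NYT network in dataset 1 concrete, the following Python sketch builds a tag co-occurrence network in which every edge stores the IDs of the articles that contain both endpoint tags. The input format (a dict from article ID to tags), the function name `build_tag_network`, and the sample tags are assumptions for illustration; this is not the script that produced nyt.gml.

```python
from collections import defaultdict
from itertools import combinations


def build_tag_network(articles):
    """Build a tag co-occurrence network (hypothetical helper).

    articles: dict mapping article ID -> iterable of tags (assumed input format).
    Returns (nodes, edges): nodes is the set of tags; edges maps each sorted
    tag pair to the sorted list of IDs of articles containing both tags.
    """
    nodes = set()
    edges = defaultdict(set)
    for article_id, tags in articles.items():
        unique_tags = sorted(set(tags))
        nodes.update(unique_tags)
        # Every pair of tags that co-occur in this article gets this article's ID.
        for a, b in combinations(unique_tags, 2):
            edges[(a, b)].add(article_id)
    return nodes, {pair: sorted(ids) for pair, ids in edges.items()}


if __name__ == "__main__":
    sample = {  # made-up articles, not taken from the NYT dataset
        "a1": ["europe", "politics", "trade"],
        "a2": ["politics", "europe"],
        "a3": ["weather", "asia"],
    }
    nodes, edges = build_tag_network(sample)
    print(len(nodes), len(edges))  # prints: 5 4
    print(edges[("europe", "politics")])  # prints: ['a1', 'a2']
```

Keeping the article IDs on each edge, rather than only a count, is what allows the original articles to be retrieved from any link.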

PIWI - Pixel-Oriented Graph Visualization

PIWI employs pixel-oriented techniques [1], among the most scalable multidimensional visualization techniques, and inherits their good scalability (it scales to graphs with thousands of nodes). It also employs tag clouds so that detailed information can be accessed easily. PIWI allows users to carry out the following tasks: (1) examine graph communities (clusters) within a large graph, learn their semantics (labels), and explore their relationships to the rest of the graph; (2) select a large number of nodes simultaneously with one mouse click, conduct advanced selections according to complex structural and attribute criteria, manipulate selected nodes, and perform progressive visual exploration on selection results (e.g., constructing new communities from selection results); and (3) visually explore the relationships between node attributes and the graph structure.

[1] D. A. Keim and H.-P. Kriegel. VisDB: Database exploration using multidimensional visualization. IEEE Computer Graphics and Applications, 14(5):40-49, 1994.
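The core idea behind pixel-oriented techniques [1] is to map each data item to a single pixel, so that a display of modest size can hold thousands of items. The Python sketch below illustrates that idea under simplifying assumptions: it sorts nodes by a value (for example, a distance to a cluster), maps each value to a gray level, and fills a grid row by row. The row-major arrangement, the grayscale mapping, and the name `pixel_layout` are our own choices; VisDB, for instance, arranges items in a spiral, and PIWI's actual layout may differ.

```python
import math


def pixel_layout(values, width=None):
    """Arrange items as one pixel each, sorted by value (hypothetical helper).

    values: dict mapping item ID -> non-negative number (e.g., a distance).
    Returns (grid, order): grid is a list of rows of gray levels in 0..255
    (None marks unused cells); order lists item IDs in placement order.
    """
    if not values:
        return [], []
    # Sort so that items with similar values land in neighboring pixels.
    order = sorted(values, key=lambda item: (values[item], item))
    n = len(order)
    if width is None:
        width = math.ceil(math.sqrt(n))  # roughly square display
    height = math.ceil(n / width)
    max_value = max(values.values()) or 1  # avoid dividing by zero
    grid = [[None] * width for _ in range(height)]
    for index, item in enumerate(order):
        row, col = divmod(index, width)
        # Small values become dark pixels, large values light pixels.
        grid[row][col] = round(255 * values[item] / max_value)
    return grid, order
```

For example, `pixel_layout({"a": 0, "b": 3, "c": 1})` places `a`, `c`, `b` in a 2x2 grid with gray levels 0, 85, and 255, leaving the last cell unused.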

The following visual analytics pipeline is implemented in PIWI: (1) constructing node groups by automatic graph community analysis or user interactions; (2) for each group, visually presenting its node labels and its distances to the rest of the graph; and (3) letting users interactively explore the graph, select nodes based on their group memberships, semantics, and distance information, and construct new groups for further visual exploration. To increase the scalability of PIWI, we have accelerated it using GPU techniques and achieved real-time interactions for graphs with over ten thousand nodes.
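Step (2) of the pipeline depends on how far each node lies from a group. A minimal Python sketch, assuming that distance means the shortest-path hop count from the nearest group member and that the graph is given as an undirected adjacency dictionary, computes it with a multi-source breadth-first search. Selecting nodes by distance, as in step (3), then reduces to filtering the result. The names `distances_from_group` and `select_within` are hypothetical, and this CPU sketch says nothing about PIWI's GPU implementation.

```python
from collections import deque


def distances_from_group(adjacency, group):
    """Hop distance from a node group to every reachable node (multi-source BFS).

    adjacency: dict mapping node -> iterable of neighbors (undirected graph).
    group: iterable of nodes forming the group; members get distance 0.
    Unreachable nodes are omitted from the result.
    """
    dist = {node: 0 for node in group}
    queue = deque(dist)  # start the search from all group members at once
    while queue:
        node = queue.popleft()
        for neighbor in adjacency.get(node, ()):
            if neighbor not in dist:
                dist[neighbor] = dist[node] + 1
                queue.append(neighbor)
    return dist


def select_within(adjacency, group, max_hops):
    """Select non-member nodes whose distance to the group is at most max_hops."""
    members = set(group)
    dist = distances_from_group(adjacency, members)
    return {node for node, d in dist.items() if node not in members and d <= max_hops}
```

Because every group member starts in the queue at distance 0, one search yields the distance to the nearest member for the whole graph, instead of one search per member.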

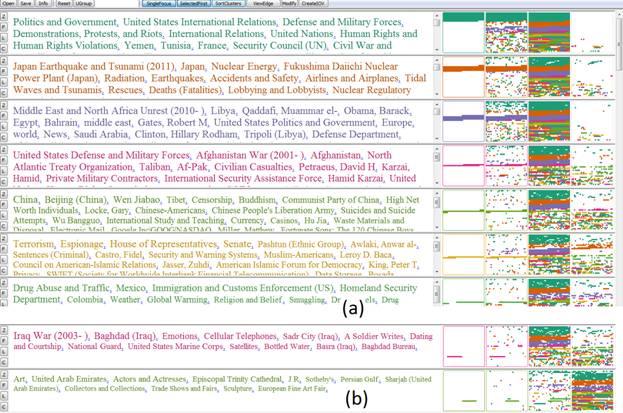

The NYT tag co-occurrence network in PIWI. (a) Browsing the largest clusters in the graph; more clusters can be examined using the scroll bar. (b) Comparing the significance of two clusters of similar size using the pixel-oriented displays.

Papers:

J. Yang, Y. Liu, X. Zhang, X. Yuan, Y. Zhao, S. Barlowe, and S. Liu: PIWI: Visually exploring graphs based on their community structure. IEEE Transactions on Visualization and Computer Graphics, to appear. (full paper) (video)

Software:

PIWI, free software for visualizing large graphs, will be available soon.

Newdle - Online News Graph Visual Analytics

Newdle is a variation of PIWI customized for online news exploration. It takes online NYT news RSS feeds as its input. Newdle constructs a network of news articles according to their tag similarities and visually presents this graph to users using LFGV. As in PIWI, clusters are constructed and their semantics are visually presented to users as tag clouds. Unlike PIWI, Newdle hides the multivariate display so that more screen space can be devoted to the semantics. Information such as the neighborhood of clusters or nodes is retrieved through interactive visual exploration.
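The text does not specify which tag similarity Newdle uses. The Python sketch below assumes Jaccard similarity between the tag sets of two articles and links articles whose similarity reaches a threshold; the measure, the threshold value of 0.3, and the names `jaccard` and `build_article_network` are illustrative assumptions, not Newdle's documented method.

```python
from itertools import combinations


def jaccard(tags_a, tags_b):
    """Size of the intersection divided by size of the union (0.0 if both empty)."""
    union = tags_a | tags_b
    return len(tags_a & tags_b) / len(union) if union else 0.0


def build_article_network(article_tags, threshold=0.3):
    """Link two articles when their tag similarity reaches the threshold.

    article_tags: dict mapping article ID -> collection of tags.
    threshold: hypothetical cut-off chosen for illustration only.
    Returns a dict mapping sorted article-ID pairs -> similarity (edge weight).
    """
    edges = {}
    for a, b in combinations(sorted(article_tags), 2):
        sim = jaccard(set(article_tags[a]), set(article_tags[b]))
        if sim >= threshold:
            edges[(a, b)] = sim
    return edges
```

Comparing every pair of articles is quadratic in their number; an inverted index from tags to articles could restrict the comparisons to articles that share at least one tag.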

Overview of a news article graph with thousands of nodes. The semantics of the clusters are displayed.

The relationships between a focus cluster (at the top) and other clusters. Three stars indicate a direct link and two stars indicate an indirect link.
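The caption does not define direct and indirect links precisely. One plausible reading, assumed in this Python sketch, is that two clusters are directly linked when some edge joins their members, and indirectly linked when there is no such edge but a member of each cluster shares a common neighbor (a path of length two). The function name `link_stars` and this definition are hypothetical.

```python
def link_stars(adjacency, focus, other):
    """Return '***' for a direct link, '**' for an indirect link, '' otherwise.

    adjacency: dict mapping node -> iterable of neighbors (undirected graph).
    focus, other: node collections of two disjoint clusters.
    Assumed semantics, not necessarily Newdle's exact rule:
      direct   = some edge joins a focus member and an other-cluster member;
      indirect = no direct edge, but a path of length two connects them.
    """
    focus, other = set(focus), set(other)
    neighbors_of_focus = set()
    for node in focus:
        neighbors_of_focus.update(adjacency.get(node, ()))
    if neighbors_of_focus & other:
        return "***"
    # Look one step further: does any neighbor of the focus cluster touch the other one?
    for node in neighbors_of_focus:
        if set(adjacency.get(node, ())) & other:
            return "**"
    return ""
```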

Papers:

J. Yang, D. Luo, and Y. Liu: Newdle: Interactive Visual Exploration of Large Online News Collections. IEEE Computer Graphics and Applications 30(5): 32-41 (2010).

Y. Liu, S. Barlowe, Y. Feng, J. Yang, and M. Jiang: Evaluating exploratory visualization systems: A user study on how clustering-based visualization systems support information seeking from large document collections. Information Visualization, accepted. (full paper)

Visualizing Temporally Evolving Insight Networks

We have applied the LFGV idea to visualize temporally evolving insight networks. The application background of this work is supporting asynchronous collaborative visual analytics. In this application, insights generated by multiple users over a long period of time are automatically associated to form an insight network, which users visually explore for hypothesis generation and evaluation. Two link-free techniques have been developed: (1) dynamic visualization using a force-based dynamic model (see the StreamIT website at Kent State University). Nodes are dynamically inserted into a 2D projection of the graph according to their time stamps, and their distances in the 2D display visually represent their distances in the graph. Clustering is conducted on the fly, and the clustering results are updated dynamically as more nodes are inserted. Tag clouds visually represent the semantics of the clusters. (2) A regional graph that allows users to visually analyze the relationships between two groups of nodes in a multidimensional space. Had traditional NLDs been used in this application, the visualization would have been much more cluttered and the temporal evolution much harder to examine.
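The idea of inserting nodes by time stamp and letting on-screen distances follow graph distances can be illustrated with a deliberately simple force model. In this Python sketch, each new node starts near the centroid of the current layout, and every pair of nodes is then joined by a spring whose rest length is `target(u, v)`. The function `insert_and_relax`, the `target` callback (for example, a graph distance between two insights), and the step size are assumptions; this is not StreamIT's actual model, and it omits on-the-fly clustering and tag clouds.

```python
import math
import random


def insert_and_relax(positions, new_node, target, rng, iterations=50, step=0.1):
    """Insert one node into a 2D layout, then relax all nodes with springs.

    positions: dict node -> (x, y) of nodes already displayed (updated in place).
    target: hypothetical callback (u, v) -> desired on-screen distance,
            e.g. the distance between u and v in the graph.
    rng: random.Random instance used for the initial jitter.
    Every pair of nodes is joined by a spring with rest length target(u, v).
    """
    # Start the new node near the centroid of the current layout.
    if positions:
        cx = sum(x for x, _ in positions.values()) / len(positions)
        cy = sum(y for _, y in positions.values()) / len(positions)
    else:
        cx = cy = 0.0
    positions[new_node] = (cx + rng.uniform(-1, 1), cy + rng.uniform(-1, 1))

    nodes = list(positions)
    for _ in range(iterations):
        # Accumulate all displacements first, then apply them together.
        moves = {node: [0.0, 0.0] for node in nodes}
        for i, u in enumerate(nodes):
            for v in nodes[i + 1:]:
                (ux, uy), (vx, vy) = positions[u], positions[v]
                dx, dy = vx - ux, vy - uy
                dist = math.hypot(dx, dy) or 1e-9
                # > 0 pulls u and v together (spring too long), < 0 pushes them apart.
                pull = step * (dist - target(u, v)) / dist
                moves[u][0] += pull * dx
                moves[u][1] += pull * dy
                moves[v][0] -= pull * dx
                moves[v][1] -= pull * dy
        for node, (mx, my) in moves.items():
            x, y = positions[node]
            positions[node] = (x + mx, y + my)
    return positions
```

Calling it once per arriving node, in time-stamp order and with one shared `random.Random`, yields an evolving layout; with all targets equal to 1, three nodes tend toward an equilateral triangle. Each iteration costs time quadratic in the number of nodes, so a system handling long streams would need a cheaper approximation.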

Insight management in asynchronous collaborative visual analytics

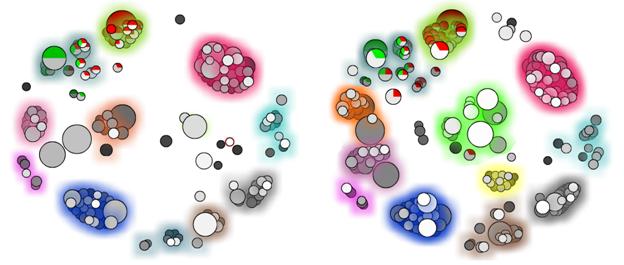

Two screenshots of the animation of an evolving network. Significant clusters are highlighted in color.

Comparing and associating two groups of insights using regional graphs.

Papers:

Y. Chen, J. Alsakran, S. Barlowe, J. Yang, and Y. Zhao: Supporting effective common ground construction in asynchronous collaborative visual analytics. Accepted by IEEE VAST 2011. Video

J. Alsakran, Y. Chen, D. Luo, Y. Zhao, J. Yang, W. Dou, and S. Liu: Real-time Visualization of Streaming Text with Force-Based Dynamic System. IEEE Computer Graphics and Applications, to appear. Video

J. Alsakran, Y. Chen, Y. Zhao, J. Yang, and D. Luo: STREAMIT: dynamic visualization and interactive exploration of text streams. Proc. IEEE Pacific Visualization Symposium 2011, pages 131-138.

Software:

StreamIT will be available to the public soon.

Weblog and Other Online Information Network Construction

Dr. Jianping Fan and his students have conducted exciting work with the support of this project, such as social media analysis and retrieval, and visual concept network generation and visualization. Here is a list of their relevant publications:

J. Fan, Y. Shen, C. Yang, N. Zhou, "Harvesting Large-Scale Weakly-Tagged Image Databases from the Web", IEEE Conf. on Computer Vision and Pattern Recognition (CVPR'10), 2010.

Y. Shen, J. Fan, "Leveraging Loosely-Tagged Images and Inter-Object Correlations for Tag Recommendation", ACM Multimedia, 2010.

H. Luo, J. Fan, Y. Zhou, "Multimedia news exploration and retrieval by integrating keywords, relations and visual features", Multimedia Tools and Applications, vol. 51, pp. 625-648, 2011.

J. Fan, Y. Shen, C. Yang, N. Zhou, "Structured Max-Margin Learning for Inter-Related Classifier Training and Multi-Label Image Annotation", IEEE Trans. on Image Processing, 2010.

Y. Gao, C. Yang, J. Fan, "Incorporate Visual Analytics to Design a Human-Centered Computing Framework for Personalized Classifier Training and Image Retrieval", LNCS, 2009.

Z. Li, H. Luo, J. Fan, "Incorporating Camera Metadata for Attended Region Detection and Consumer Photo Classification", ACM Multimedia (MM'09), Beijing, 2009. A long version was also published in Multimedia Tools and Applications, 2010 (journal version).

J. Fan, H. Luo, Y. Shen, C. Yang, "Integrating Visual and Semantic Contexts for Topic Network Generation and Word Sense Disambiguation", ACM Conf. on Image and Video Retrieval (CIVR'09), 2009.

Y. Gao, J. Peng, H. Luo, D. Keim, J. Fan, "An Interactive Approach for Filtering out Junk Images from Keyword-Based Google Search Results", IEEE Trans. on Circuits and Systems for Video Technology, vol. 19, no. 10, 2009. Some preliminary results were also presented at MMM'08. An online demo is available at Junk Images filtering demo.

J. Fan, D. A. Keim, Y. Gao, H. Luo, Z. Li, "JustClick: Personalized Image Recommendation via Exploratory Search from Large-Scale Flickr Images", IEEE Trans. on Circuits and Systems for Video Technology, vol. 19, no. 2, pp. 273-288, 2009.

For more information about the research of Dr. Fan and his group, please visit Dr. Fan's website.