1. COVID-19 Open Research Dataset Challenge (CORD-19)

Background: In response to the COVID-19 pandemic, the Allen Institute for AI, in collaboration with leading research groups, has prepared and distributed the COVID-19 Open Research Dataset (CORD-19), a free resource of over 47,000 scholarly articles, including over 36,000 with full text, about COVID-19 and the coronavirus family of viruses for use by the global research community.

Objective: Solve one of the challenge tasks proposed on Kaggle. For example, train a deep learning model that identifies COVID-19 risk factors. This can be approached as a sequence labeling problem, where words that correspond to risk factors, e.g. diabetes, are labeled as positive. Feel free to propose other tasks based on this data, as long as you can argue that the proposed task is important. Under "Additional Resources", you can find other datasets that may support a deep learning project.
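To make the sequence labeling framing concrete, here is a minimal sketch that turns a sentence into per-token 0/1 labels by exact, case-insensitive matching against a seed list of risk-factor phrases. The seed list and the function name `label_tokens` are hypothetical; labels produced this way are only weak (distantly supervised) training targets, and a real project would still need some manually annotated sentences for evaluation.

```python
def label_tokens(tokens, risk_factors):
    """Return 1 for each token inside a known risk-factor phrase, else 0.

    risk_factors: iterable of phrases, e.g. {"diabetes", "chronic kidney disease"}.
    Matching is exact and case-insensitive over whitespace-split tokens.
    """
    labels = [0] * len(tokens)
    lowered = [t.lower() for t in tokens]
    for phrase in (p.lower().split() for p in risk_factors):
        n = len(phrase)
        for i in range(len(lowered) - n + 1):
            if n and lowered[i:i + n] == phrase:
                # Mark every token covered by the matched phrase as positive.
                for j in range(i, i + n):
                    labels[j] = 1
    return labels


sentence = "Patients with diabetes or chronic kidney disease had worse outcomes"
print(label_tokens(sentence.split(), {"diabetes", "chronic kidney disease"}))
# prints [0, 0, 1, 0, 1, 1, 1, 0, 0, 0]
```

A token-level classifier (e.g. a BiLSTM or Transformer tagger) would then be trained to predict these labels from context, so that it can also flag risk factors missing from the seed list.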

2. Humor recognition and generation

Background: As pointed out by West and Horvitz, AAAI 2019, "Humor is an essential human trait. Efforts to understand humor have called out links between humor and the foundations of cognition, as well as the importance of humor in social engagement. As such, it is a promising and important subject of study, with relevance for artificial intelligence and human–computer interaction".

Objective: Solve the SemEval 2020 task on Assessing the Funniness of Edited News Headlines. This requires either predicting how humorous a given edited headline is, or determining which of two edited versions of a headline is funnier. A harder, but also more interesting and useful, task would be to take as input a serious headline and output an edited version where one word is changed to make it funny. The dataset is described in this paper by Hossain et al., NAACL 2019.
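One way to connect the two prediction settings is to train a single model that scores the funniness of an edited headline and then compare scores to decide which of two edits is funnier. The sketch below assumes that the replaced word is marked in the original headline as `<word/>` (check the released data for the exact format), and `score` stands for a hypothetical trained regression model; RMSE is shown as a natural metric for the graded setting.

```python
import math
import re
from typing import Callable, Sequence

# Assumed markup for the word that gets replaced, e.g. "<budget/>".
EDIT_MARKUP = re.compile(r"<[^<>]+/>")


def apply_edit(original: str, edit: str) -> str:
    """Build the edited headline by substituting `edit` for the marked word."""
    return EDIT_MARKUP.sub(lambda _match: edit, original, count=1)


def rmse(predicted: Sequence[float], gold: Sequence[float]) -> float:
    """Root mean squared error between predicted and gold funniness scores."""
    return math.sqrt(sum((p - g) ** 2 for p, g in zip(predicted, gold)) / len(gold))


def funnier(score: Callable[[str], float], headline_a: str, headline_b: str) -> int:
    """Pairwise decision from one scoring model: 1 if A scores higher, 2 if B does, 0 on a tie."""
    a, b = score(headline_a), score(headline_b)
    return 1 if a > b else 2 if b > a else 0


edited = apply_edit("Senate passes new <budget/> bill", "pizza")
# edited == "Senate passes new pizza bill"
```

Reusing one scorer for both settings is a design choice, not a requirement; a model trained directly on pairs (e.g. with a ranking loss) is an equally reasonable alternative.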

3. Train GANs to generate surprising outputs

Background: Deep generative architectures have achieved impressive results in domains ranging from image generation to music composition and text generation. While they can generate highly realistic samples that have never been seen during training, they have very limited capacity for producing outputs that are truly novel or surprising. Surprise, however, is a powerful driver of discovery and creativity, which in turn are widely considered essential components of intelligent behavior.

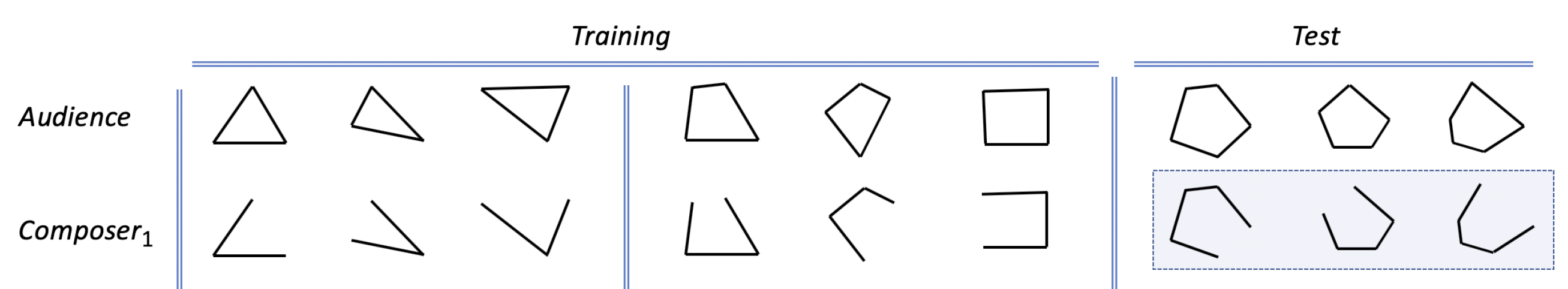

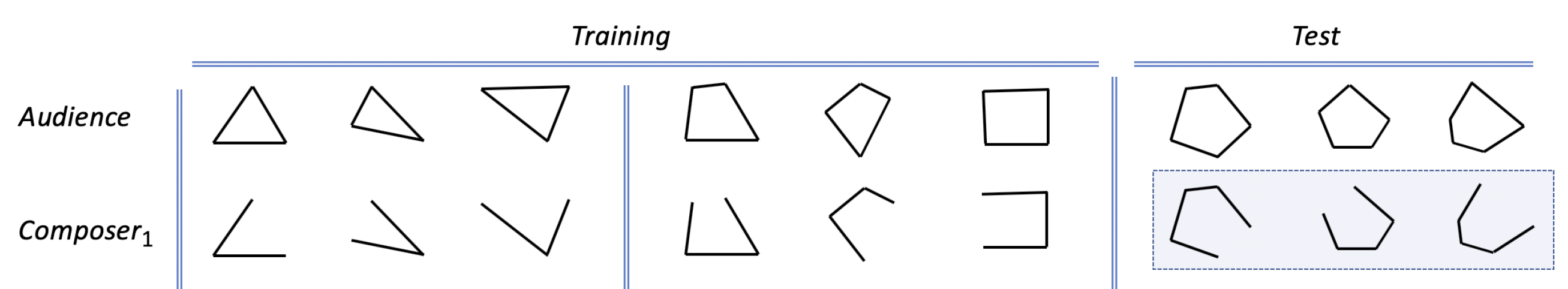

Objective: Train a two-model generative architecture that can learn patterns of expectation and surprise from the data. One artificial dataset to experiment with is shown in the images above, where an audience GAN is trained to generate polygons. A composer GAN is then trained to generate triangles and quadrilaterals with a missing side, using as input hidden states computed by the audience model. At test time, an audience model trained on pentagons is plugged into the composer, which should then generate pentagons with a missing side. For more details, contact me.
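A minimal sketch of how the artificial polygon data could be generated, assuming regular polygons with random size and rotation, each represented as a list of edges (pairs of vertices). The function names are hypothetical, and how the shapes are fed to the GANs (rasterized images or edge coordinates) is left open.

```python
import math
import random


def regular_polygon_edges(n_sides, radius=1.0, rotation=0.0):
    """Edges of a regular n-gon centered at the origin, as (start, end) vertex pairs."""
    vertices = [
        (radius * math.cos(rotation + 2 * math.pi * k / n_sides),
         radius * math.sin(rotation + 2 * math.pi * k / n_sides))
        for k in range(n_sides)
    ]
    return [(vertices[k], vertices[(k + 1) % n_sides]) for k in range(n_sides)]


def drop_one_side(edges, rng):
    """Return the same polygon with one randomly chosen side removed."""
    missing = rng.randrange(len(edges))
    return [edge for i, edge in enumerate(edges) if i != missing]


def sample_pair(n_sides, rng):
    """One (complete polygon, polygon with a missing side) pair with random size and rotation."""
    edges = regular_polygon_edges(n_sides,
                                  radius=rng.uniform(0.5, 1.0),
                                  rotation=rng.uniform(0.0, 2 * math.pi))
    return edges, drop_one_side(edges, rng)


rng = random.Random(0)
# Training shapes: triangles and quadrilaterals; held-out test shape: pentagons.
train_pairs = [sample_pair(n, rng) for n in (3, 4) for _ in range(100)]
test_pairs = [sample_pair(5, rng) for _ in range(10)]
```

The complete polygons would train the audience model, and the missing-side versions would be the composer's targets; the pentagon pairs are only used to check whether the composer generalizes to a shape it never saw.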

4. Coreference in mathematical statements

Objective: Adapt an existing ML-based coreference resolution system to resolve coreference in mathematical statements, e.g. proofs, as shown on slides 23 to 32 in this lecture. This would entail some manual annotation of mathematical proofs (some already done), designing and implementing coreference features that are specific to mathematical statements, adding these features to the existing ML-based system, and training and evaluating the resulting system. A substantial amount of work has already been done. For more details, contact me at <bunescu@ohio.edu>.
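As an illustration of what "features specific to math statements" might look like, here is a sketch of a mention-pair feature function. It assumes proofs are written in LaTeX with inline math in `$...$`, that mentions have already been detected, and that the existing system consumes a feature dictionary per candidate pair; the `Mention` class and every feature name are hypothetical, not the features already developed for this project.

```python
import re
from dataclasses import dataclass

INLINE_MATH = re.compile(r"\$([^$]+)\$")
PRONOUNS = {"it", "this", "they", "its"}


@dataclass
class Mention:
    text: str      # surface string, e.g. "$x$", "the integer $x$", "it"
    sentence: int  # index of the sentence containing the mention


def math_spans(mention: Mention) -> set:
    """Contents of the inline-math spans ($...$) in a mention, with whitespace removed."""
    return {re.sub(r"\s+", "", m) for m in INLINE_MATH.findall(mention.text)}


def pair_features(antecedent: Mention, anaphor: Mention) -> dict:
    """Hypothetical math-specific features for a candidate (antecedent, anaphor) pair."""
    a_math, b_math = math_spans(antecedent), math_spans(anaphor)
    return {
        "same_math_expression": bool(a_math & b_math),
        "both_contain_math": bool(a_math) and bool(b_math),
        "anaphor_is_pronoun": anaphor.text.lower() in PRONOUNS,
        "sentence_distance": anaphor.sentence - antecedent.sentence,
    }


# "Let $n$ be an integer. ... Then $n$ is even. It ..."
features = pair_features(Mention("an integer $n$", 0), Mention("$n$", 1))
```

Such features would be appended to the generic ones the existing system already computes, so that the learner can weigh symbol identity against the usual lexical and distance cues.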

5. Extraction of text relevant to a citation

Objective: Given a citation of paper C in another paper P, extract all the sentences in P that are directly relevant to C, i.e. sentences that mention C or discuss content (methods, results) from C. For this, you would have to create a dataset and design and train a deep learning model.
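Sentences that explicitly mention C are easy to find with simple rules, which could help bootstrap the dataset; the model is really needed for sentences that discuss C implicitly. The sketch below assumes numeric citation markers such as `[3]` and a known first-author surname; the splitter is deliberately naive (it only avoids breaking after "et al.").

```python
import re

# Split after ., ! or ? followed by whitespace, except right after "et al."
SENTENCE_BREAK = re.compile(r"(?<=[.!?])(?<!et al\.)\s+")


def split_sentences(text):
    """Naive sentence splitter for plain-text papers."""
    return [s.strip() for s in SENTENCE_BREAK.split(text) if s.strip()]


def explicit_mentions(paper_text, citation_marker, first_author):
    """Indices of sentences in P that explicitly mention cited paper C."""
    author = re.compile(r"\b" + re.escape(first_author) + r"\b")
    return [i for i, s in enumerate(split_sentences(paper_text))
            if citation_marker in s or author.search(s)]


text = ("Transformers were introduced in [3]. Vaswani et al. showed strong translation results. "
        "We use a different optimizer. Their attention mechanism is central to our model.")
hits = explicit_mentions(text, "[3]", "Vaswani")
```

Here `hits` is `[0, 1]`: the last sentence is also relevant to C but mentions it only through "Their", which is exactly the kind of case the trained model should recover.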

6. Explainable Sequence Models

Objective: Use a method such as Layer-wise Relevance Propagation (LRP), or design your own, to determine which words in an input sequence (e.g. a sentence or document) are most relevant for the classification decisions of an RNN-based or Transformer-based classifier. An interesting example is shown in this ACL 2019 paper. A good project would aim for a better understanding of these models through novel analyses or by developing new ways of probing them.
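A simple, model-agnostic baseline to compare against is leave-one-out occlusion: delete each word in turn and measure how much the predicted probability drops. This is a different technique from LRP (it needs no access to model internals, only a prediction function). In the sketch, `predict_proba` is a hypothetical wrapper around a trained classifier, and `toy_classifier` merely stands in for it.

```python
from typing import Callable, List, Tuple


def occlusion_importance(tokens: List[str],
                         predict_proba: Callable[[List[str]], float]) -> List[Tuple[str, float]]:
    """Leave-one-out occlusion: the importance of token i is the drop in the predicted
    probability of the class of interest when token i is deleted from the input."""
    base = predict_proba(tokens)
    return [(tok, base - predict_proba(tokens[:i] + tokens[i + 1:]))
            for i, tok in enumerate(tokens)]


def toy_classifier(tokens: List[str]) -> float:
    """Stand-in for a trained RNN/Transformer: P(positive) grows with positive words."""
    positive_words = {"great", "excellent"}
    hits = sum(tok in positive_words for tok in tokens)
    return min(1.0, 0.2 + 0.4 * hits)


scores = occlusion_importance("the movie was great".split(), toy_classifier)
most_relevant = max(scores, key=lambda pair: pair[1])[0]  # "great"
```

Occlusion ignores interactions between words and costs one forward pass per token, which is one reason propagation-based methods such as LRP are attractive for longer documents.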

7. Mapping comments to code

Background: Many programming tasks are routine and repetitive. It would be useful to have a "program synthesis" model that takes as input a problem description in natural language (English) and outputs code that solves that problem. One approach to building such a model would be to train it on a dataset of (English, code) pairs, but then the question is where to get a large dataset of such training examples. One possible answer lies in the comments: programmers routinely annotate code with English descriptions, so one could create a dataset for training an automatic program synthesis system by extracting (comment, code segment) pairs from large software projects. Given a comment, the corresponding code segment starts right after the comment. Where the segment ends, however, can be less clear, especially for fine-grained comments inside functions.

Objective: Train a model that takes as input a comment in a source code file and determines the corresponding code segment. A possible approach is to train an RNN classifier that optionally first reads the comment, then goes over the lines of code following the comment and classifies each of them as either associated with the comment (positive) or not (negative). At test time, the RNN would stop at the first line of code that it classifies as negative. The system could be trained on raw sequences of code tokens, or on higher-level features of each line that exploit the syntactic structure of the code, e.g. whether the line is blank, whether it is at the same indentation level as the first line after the comment, or whether another comment immediately follows it.
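The feature-based variant and the stop-at-first-negative decoding rule can be sketched as follows. This assumes Python-style `#` comments and uses a hand-written rule in place of the trained RNN classifier; all function names are hypothetical.

```python
from typing import Callable, Dict, List


def indent(line: str) -> int:
    """Number of leading whitespace characters."""
    return len(line) - len(line.lstrip())


def line_features(lines: List[str], i: int, first: int) -> Dict[str, bool]:
    """Hand-crafted features of lines[i]; `first` is the index of the first line after the comment."""
    nxt = lines[i + 1] if i + 1 < len(lines) else ""
    return {
        "is_blank": not lines[i].strip(),
        "same_indent_as_first": indent(lines[i]) == indent(lines[first]),
        "next_is_comment": nxt.strip().startswith("#"),  # assumes '#' comments
    }


def segment_for_comment(lines: List[str], comment_idx: int,
                        classify: Callable[[Dict[str, bool]], bool]) -> List[str]:
    """Collect lines after the comment until the first one classified as negative."""
    first = comment_idx + 1
    segment = []
    for i in range(first, len(lines)):
        if not classify(line_features(lines, i, first)):
            break  # test-time rule: stop at the first negative line
        segment.append(lines[i])
    return segment


source = [
    "def sum_of_squares(xs):",
    "    # accumulate the squares",
    "    total = 0",
    "    for x in xs:",
    "        total += x * x",
    "",
    "    # return the result",
    "    return total",
]
# Toy rule standing in for the trained classifier: a blank line ends the segment.
segment = segment_for_comment(source, 1, lambda f: not f["is_blank"])
```

In the learned version, `classify` would be replaced by the RNN's per-line decision, which can also take into account the comment text and the lines seen so far.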

A dataset and initial feature-based approach have already been developed by Nidal Abuhajar <na849215@ohio.edu> and Shuyu Gong <sg699317@ohio.edu>.

8. Behavioral context recognition in-the-wild from mobile sensors

Background: Large amounts of time series data are nowadays generated by sensors integrated in smartphones or wearable bands. Behavioral context recognition refers to the use of sensor data to automatically detect user behavior, e.g. whether the user is eating, walking, biking, or surfing the web. There are publicly available datasets with labeled sensor data, some of them listed below.

Objective: Train a model that takes as input a time series of sensor data and determines whether the user is engaged in a particular type of behavior at the current time. You can use the two datasets below:
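Whichever dataset is used, a common first step is to cut the continuous sensor stream into fixed-length windows and label each window. The sketch below assumes a single 1-D channel (e.g. accelerometer magnitude) and hypothetical window and step sizes; the summary statistics could feed a conventional classifier, while a deep model (RNN or 1-D CNN) would instead take the raw windows as input.

```python
import statistics
from typing import List, Sequence, Tuple


def sliding_windows(signal: Sequence[float], size: int, step: int) -> List[Sequence[float]]:
    """Split a 1-D sensor signal into (possibly overlapping) fixed-length windows."""
    return [signal[start:start + size] for start in range(0, len(signal) - size + 1, step)]


def window_features(window: Sequence[float]) -> Tuple[float, float, float, float]:
    """Simple summary statistics of one window: mean, standard deviation, min, max."""
    return (statistics.mean(window), statistics.pstdev(window), min(window), max(window))


# Hypothetical accelerometer-magnitude readings, one per time step.
signal = [0.9, 1.0, 1.1, 1.0, 2.5, 2.7, 2.4, 2.6, 1.0, 0.9]
windows = sliding_windows(signal, size=4, step=2)
features = [window_features(w) for w in windows]
# features[-1] summarizes the most recent window, i.e. the "current time".
```

With several channels (accelerometer axes, gyroscope, audio, location), the same windowing would be applied per channel and the results concatenated.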

Background: Neural networks are universal approximators: with enough hidden units, they can approximate any continuous function on a compact domain to arbitrary precision. When augmented with explicit memory, e.g. Differentiable Neural Computers (DNC), they become Turing complete, so in principle they can represent any program. However, even when a network can represent a given input-output mapping, it is unclear how complex its architecture needs to be and whether the mapping can be learned using gradient-based algorithms.

Objective: Is it possible to define and train a neural network that learns to output the k-th prime number, given k as input? The project will be an in-depth investigation of what it would take for this to be possible, or a proof that it is impossible with any type of neural network. Possible directions for exploration:
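Whatever direction is taken, experiments will need (k, p_k) pairs. A minimal sketch, assuming a sieve of Eratosthenes for generation and a fixed-width binary encoding of k as one possible input representation; the split into small-k training pairs and larger-k test pairs is a hypothetical setup for probing extrapolation, which is where the learning question gets interesting.

```python
import math
from typing import List


def first_primes(count: int) -> List[int]:
    """Return the first `count` primes using a sieve of Eratosthenes."""
    if count < 1:
        return []
    # For n >= 6 the n-th prime is below n * (ln n + ln ln n); 15 covers n < 6.
    if count < 6:
        limit = 15
    else:
        limit = int(count * (math.log(count) + math.log(math.log(count)))) + 1
    sieve = bytearray([1]) * (limit + 1)
    sieve[0:2] = b"\x00\x00"
    for i in range(2, math.isqrt(limit) + 1):
        if sieve[i]:
            # Cross out all multiples of i starting at i * i.
            sieve[i * i::i] = bytearray(len(range(i * i, limit + 1, i)))
    return [i for i, is_prime in enumerate(sieve) if is_prime][:count]


def to_bits(n: int, width: int) -> List[int]:
    """Fixed-width binary encoding of n, most significant bit first."""
    return [(n >> b) & 1 for b in reversed(range(width))]


pairs = list(enumerate(first_primes(1000), start=1))  # (k, p_k)
train = [(to_bits(k, 10), p) for k, p in pairs if k <= 800]
test = [(to_bits(k, 10), p) for k, p in pairs if k > 800]
```

Other encodings (e.g. predicting the gap p_k - p_{k-1}, or predicting p_k in binary) change the difficulty of the task considerably and are themselves worth comparing.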

Background: Kaggle is a web platform for hosting data mining / predictive modelling competitions.

Objective: Create and evaluate a machine learning solution for one of the active competitions. And maybe become rich in the process.